Home setup part 3: IWS

I have a strange list of requirements, and a limited amount of hardware to satisfy them with. I needed:

- a Windows desktop for Windows-only software and games

- a pfSense router

- a NAS

- a way to run and manage multiple docker containers and docker-compose stacks

- the Elastic Stack

- a way to quickly spin up multiple VMs to test things

- an OpenVPN server

among other things

Between the GS30 and the desktop, I had enough resources for all of these. I already had ESXi on the GS30, so it made sense to install ESXi on the desktop and make a cluster. This didn’t pan out because of some USB passthrough issues I ran into on the desktop. After spending some time comparing alternatives, I settled on using ProxMox for its ability to manage LXCs along with KVM instances.

Installation

Installing ProxMox was very straightforward. There are a couple of things to keep in mind though. First, the slave nodes being added to a cluster must not have any VMs. Second, both the master and slaves must use the same storage system. For example, trying to add a slave with ZFS to a master with LVM will make the local ZFS on the slave unusable. Join information for the cluster can be generated on the master by navigating to Datacenter->Cluster->Create Cluster. The join information generated here can be pasted into the second node at Datacenter->Cluster->Join Cluster to add it to the cluster.
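The same two steps can also be done from a shell with `pvecm`, the cluster manager CLI that ships with ProxMox. Here is a minimal sketch that only builds the commands instead of running them. The cluster name `homelab` and the address `192.168.1.10` are placeholders I made up for illustration, not values from my setup.

```python
import shlex


def cluster_commands(cluster_name, first_node_ip):
    """Return the pvecm invocations for a two-node cluster as argv lists.

    cluster_name and first_node_ip are placeholders supplied by the caller.
    """
    return {
        # Run once on the node that starts the cluster.
        "on_first_node": ["pvecm", "create", cluster_name],
        # Run on the node that joins, pointing at the existing node.
        "on_joining_node": ["pvecm", "add", first_node_ip],
        # Run on either node afterwards to check membership and quorum.
        "verify": ["pvecm", "status"],
    }


if __name__ == "__main__":
    for where, argv in cluster_commands("homelab", "192.168.1.10").items():
        print(f"{where}: {shlex.join(argv)}")
```

Nothing here touches the system; the lists can be passed to `subprocess.run` on the right node once the values are filled in.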

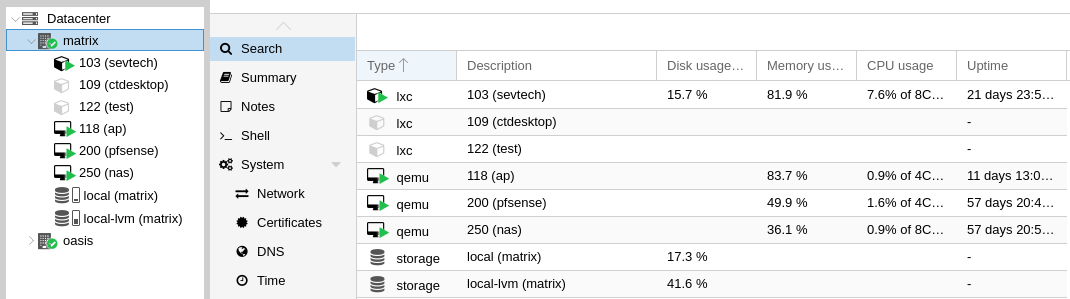

I have two nodes as of now, which I named after two of the most iconic virtual worlds, The Oasis from Ready Player One and The Matrix (from The Matrix, duh). Any VM or container that does not use hardware passthrough can easily be migrated between the nodes. There are a few VMs in my setup that can’t be moved because they depend on the hardware on a node.

VMs pinned to Matrix

pfSense

Very similar to my previous setup with ESXi, this VM has a dedicated NIC to which WAN is connected. This VM could probably be made migratable if I set up VLANs correctly, but my last attempt didn’t go so well. One issue I faced with ProxMox was that download speeds were abnormally slow. After hours of searching, I found this thread that suggests disabling “Hardware Checksum Offloading” in System->Advanced->Networking from the pfSense web interface. This change brought my WAN download speed from 1 Mbps back up to 100 Mbps.

FreeNAS

Migrating FreeNAS from ESXi to ProxMox was easier than I had imagined. After creating a new FreeNAS VM in ProxMox, I simply edited the qemu conf and added a raw disk passthrough for the FreeNAS volume and imported it from the FreeNAS web interface. I had all of my data from the previous setup ready to share.
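For anyone wondering what the raw disk passthrough looks like, here is a rough sketch. It builds the line that goes into the VM's config file and the equivalent `qm set` command. The VM ID `101`, the `scsi1` slot and the disk ID are hypothetical placeholders, not the values from my setup.

```python
def passthrough_disk_line(disk_by_id, bus="scsi", index=1):
    """Build the VM config line that attaches a whole physical disk.

    disk_by_id should be a stable /dev/disk/by-id/ path so the mapping
    survives device renumbering across reboots.
    """
    if not disk_by_id.startswith("/dev/disk/by-id/"):
        raise ValueError("expected a /dev/disk/by-id/ path")
    return f"{bus}{index}: {disk_by_id}"


def qm_set_command(vmid, disk_by_id, bus="scsi", index=1):
    """Build the equivalent `qm set` invocation as an argv list."""
    return ["qm", "set", str(vmid), f"-{bus}{index}", disk_by_id]


if __name__ == "__main__":
    disk = "/dev/disk/by-id/ata-EXAMPLE_DISK_SERIAL"  # hypothetical disk ID
    print(passthrough_disk_line(disk))
    print(" ".join(qm_set_command(101, disk)))  # 101 is a hypothetical VM ID
```

Using the `by-id` path rather than `/dev/sdX` is the important part, since the `sdX` names can change between boots.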

AP VM

Just like before, I haven’t gotten around to setting up a proper access point. I still use an Ubuntu VM with the WiFi card passed through as an access point.

VMs pinned to Oasis

win

I read this post on r/pcmasterrace years ago and wanted to try it. It seemed like a good way to avoid having to dual boot Windows and Linux. Now that I finally got around to doing it, it works better than I had imagined.

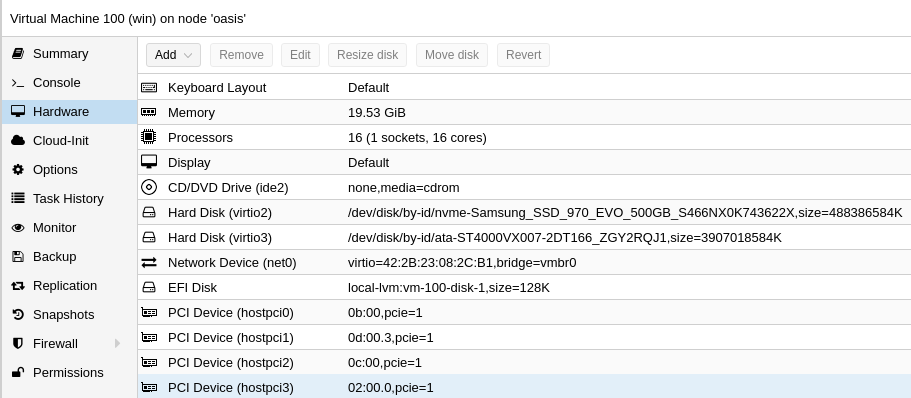

This VM has almost every important PCIe device passed through, including the 2080Ti, onboard audio and USB controllers.

Passthrough was easy to set up by following the guide on ProxMox’s wiki.

There is a slight hit to game framerates inside the VM compared to bare metal, but the difference is insignificant and the benefits of this setup are too many for me to complain. I have directly passed through a 512GB NVMe SSD and 4TB HDD to the VM. The SSD was passed through as a disk rather than a PCIe device because the VM’s BIOS could not set the NVMe disk as a persistent boot option. Half of the SSD is used as the boot disk. The other half, along with a 2GB RAMdisk and the 4TB HDD, forms a tiered storage setup powered by PrimoCache. PrimoCache works well, bringing the loading time for AC Odyssey down from 1:20 to 20 seconds.

Another benefit of having the disks passed through is that I can change the boot device from the BIOS and start Windows bare-metal on the desktop if I ever need to.

I’ve set up NVIDIA GameStream to be able to play anything from my Steam library over the internet when connected to my VPN. Now, I wouldn’t play games when I’m at work, but if I did, the experience would be great given the 6ms RTT.

One issue I have not resolved yet is that the whole system (even the hypervisor) locks up when I start this VM with the Oculus headset or sensors plugged in.

GPUbuntu

When I got the 2080Ti, I decided to put my old 1070 to use powering a second Linux VM. This VM runs Ubuntu 18.04 with the latest NVIDIA drivers and nvidia-docker2. I can run GPU databases, tensorflow-gpu notebooks and Blender render servers on this VM.

Migratable VMs

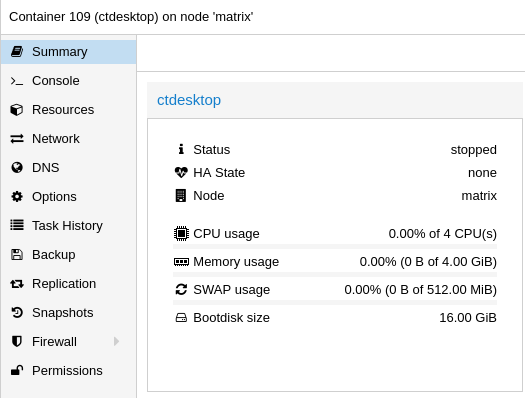

CTdesktop

A minimal container with XFCE, accessible only through VNC. Why? Why not?

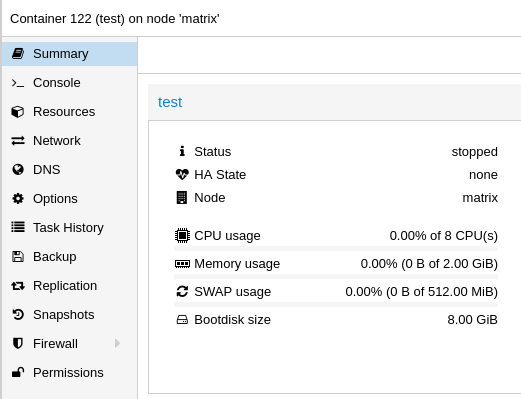

test

I don’t even remember why I made this.

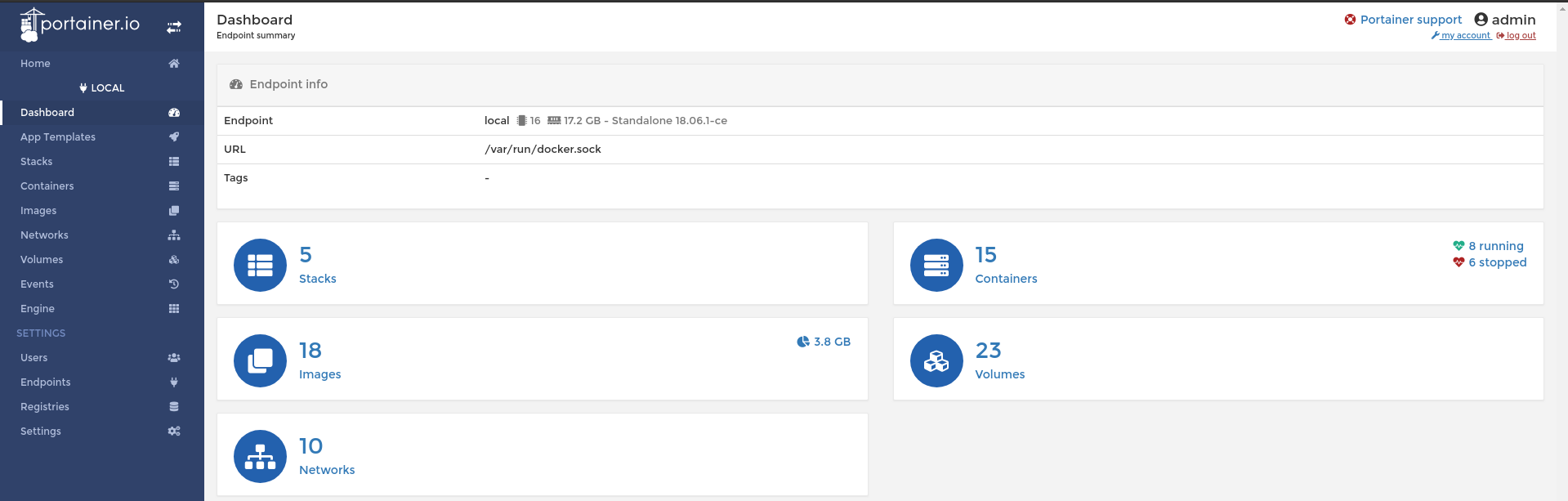

dockerhost

An LXC that runs Docker containers, managed through Portainer. One benefit of switching from ESXi to ProxMox is that I can run Docker containers without the overhead of KVM on top of the hypervisor. This container required a bit of messing around to get working.

First, docker-ce needs to be installed on the hypervisor so that overlay2 and other modules are available to the LXC. Once the LXC is created, the following three lines need to be added to /etc/pve/lxc/<id>.conf:

lxc.apparmor.profile: unconfined

lxc.cgroup.devices.allow: a

lxc.cap.drop:

Finally, install Docker in the LXC by following any of the great guides by DigitalOcean.
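If you'd rather not edit the config by hand, here is a minimal sketch that appends the three lines above to a container config only if they are missing. It assumes the container has no snapshots, because ProxMox stores snapshot sections at the end of the same file and appended lines would land inside the last one. The demo runs against a throwaway temp file with a made-up ID of `105`, not a real `/etc/pve/lxc/<id>.conf`.

```python
import tempfile
from pathlib import Path

# The three options from the post, exactly as they appear in the config.
DOCKER_LXC_LINES = (
    "lxc.apparmor.profile: unconfined",
    "lxc.cgroup.devices.allow: a",
    "lxc.cap.drop:",
)


def ensure_docker_lxc_options(conf_path):
    """Append any missing Docker-related options; return the lines added."""
    path = Path(conf_path)
    text = path.read_text()
    present = {line.strip() for line in text.splitlines()}
    missing = [line for line in DOCKER_LXC_LINES if line not in present]
    if missing:
        # Make sure the new options start on their own line.
        separator = "" if text == "" or text.endswith("\n") else "\n"
        path.write_text(text + separator + "\n".join(missing) + "\n")
    return missing


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        conf = Path(tmp) / "105.conf"  # hypothetical container ID
        conf.write_text("arch: amd64\nhostname: dockerhost\n")
        print(ensure_docker_lxc_options(conf))  # first run adds all three
        print(ensure_docker_lxc_options(conf))  # second run adds nothing
        print(conf.read_text())
```

Keep in mind that these options remove most of the container's isolation, so this only makes sense for a container you trust. The container needs a restart for the changes to take effect.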

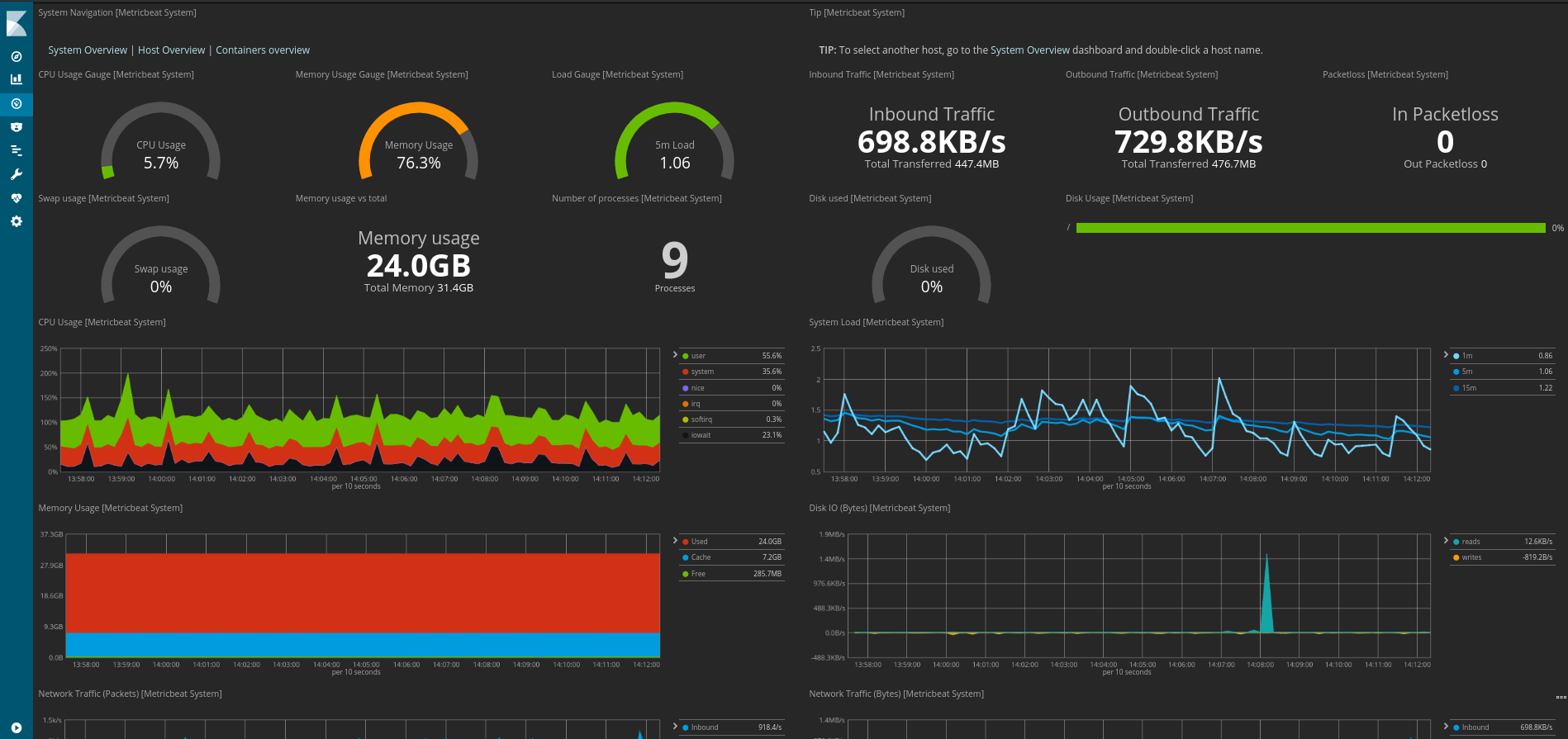

elastic

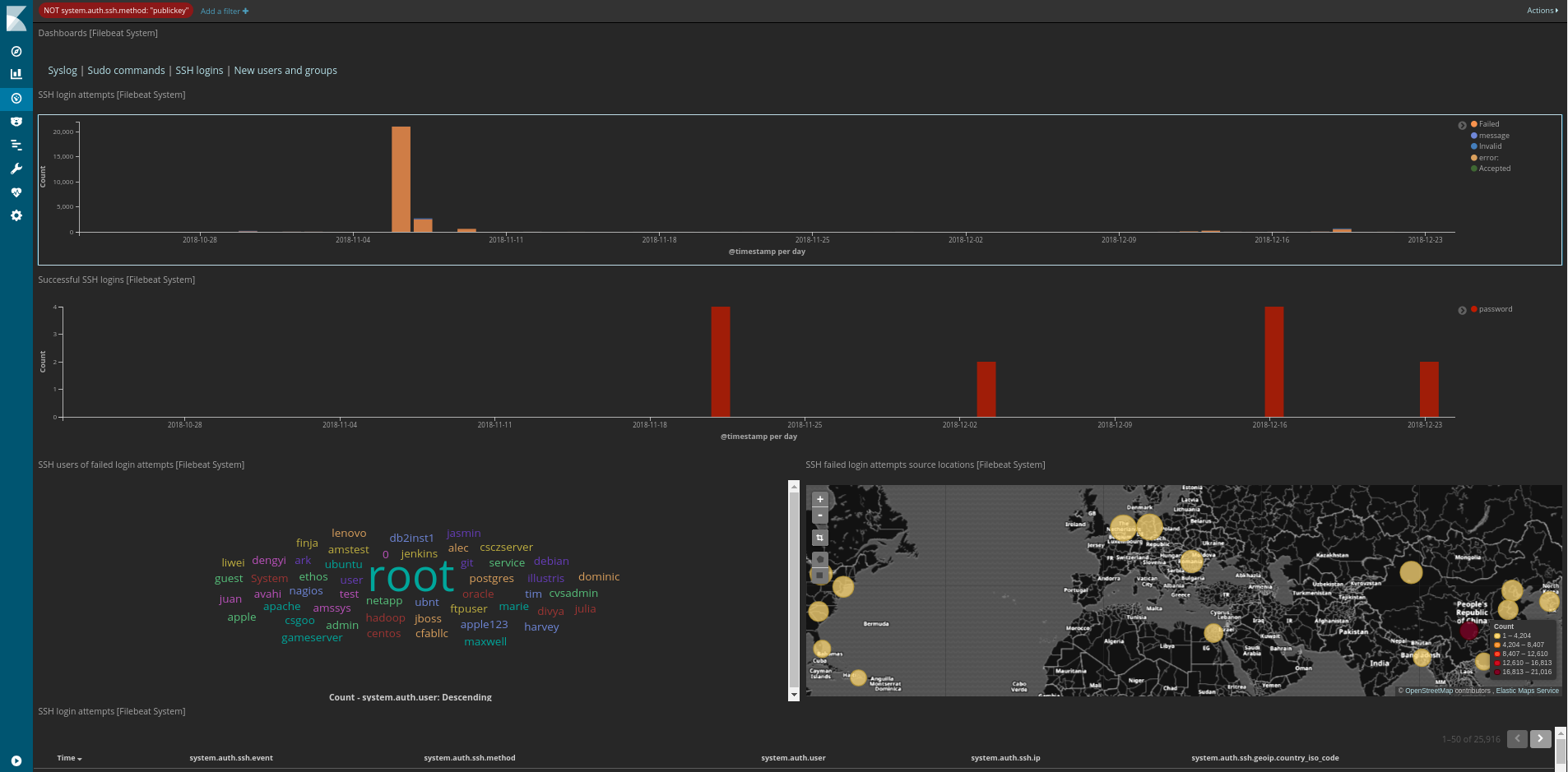

I was introduced to the Elastic Stack at work, and was impressed by how easy it was to set up detailed monitoring with Metricbeat, Filebeat, Elasticsearch and Kibana. I use Elasticsearch for monitoring performance, SSH/HTTP access and aberrant behaviour.

I found a lot of failed SSH attempts from all over the world, even on non-standard SSH ports. This is why I never use password authentication for SSH on ports exposed to the internet.

After seeing the brute force attempts, I installed fail2ban on the VM to which SSH was forwarded.
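To give a feel for what fail2ban is doing, here is a rough sketch of the core idea: count failed logins per source IP and flag the ones over a threshold. This is not fail2ban's actual code. It assumes the usual OpenSSH "Failed password" log message, the sample lines are made up, and it ignores the time window (fail2ban's `findtime`) that the real tool applies on top of `maxretry`.

```python
import re
from collections import Counter

# Matches the OpenSSH "Failed password" message (assumed log format).
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?(?P<user>\S+) "
    r"from (?P<ip>\S+) port (?P<port>\d+)"
)

# Made-up sample lines using documentation-only IP ranges.
SAMPLE_LOG = [
    "Jan 12 03:14:07 gw sshd[2211]: Failed password for root from 203.0.113.7 port 51234 ssh2",
    "Jan 12 03:14:09 gw sshd[2211]: Failed password for invalid user admin from 203.0.113.7 port 51236 ssh2",
    "Jan 12 03:14:12 gw sshd[2213]: Failed password for invalid user pi from 203.0.113.7 port 51240 ssh2",
    "Jan 12 03:15:01 gw sshd[2290]: Accepted publickey for me from 192.0.2.10 port 40022 ssh2",
    "Jan 12 03:16:44 gw sshd[2301]: Failed password for root from 198.51.100.23 port 60001 ssh2",
]


def failed_attempts_by_ip(log_lines):
    """Count failed password attempts per source IP."""
    counts = Counter()
    for line in log_lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group("ip")] += 1
    return counts


def ips_to_ban(log_lines, max_retry=5):
    """Return the IPs with at least max_retry failures, sorted."""
    counts = failed_attempts_by_ip(log_lines)
    return sorted(ip for ip, n in counts.items() if n >= max_retry)


if __name__ == "__main__":
    print(failed_attempts_by_ip(SAMPLE_LOG))
    print(ips_to_ban(SAMPLE_LOG, max_retry=3))
```

The real tool also unbans addresses after a configurable `bantime` and acts through firewall rules, which is why I use it instead of rolling my own.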

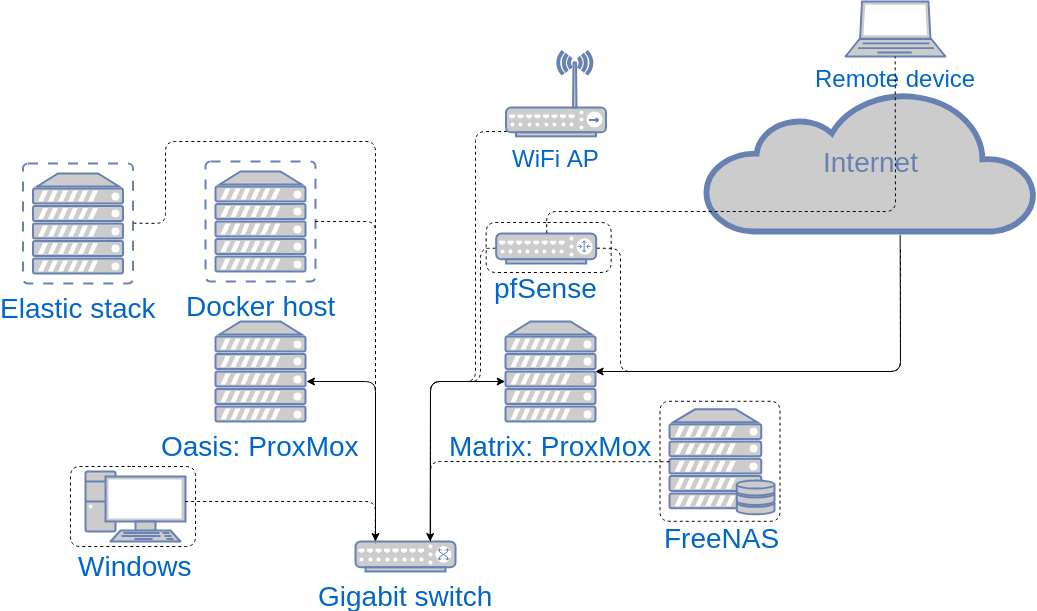

Network diagram

And this is what it all looks like in the end.